Evolving Evidence on School Voucher Effects

Summary

School vouchers have long been promoted on the grounds that they improve access to quality educational options. However, recent studies have found large negative impacts of vouchers on student achievement. While voucher advocates still point to a number of older studies showing benefits, this brief notes that those studies examined small-scale programs, and it offers an analysis of the trends in, and scale of, the negative effects.

Background

The case for school vouchers has been built predominantly on the hope that vouchers would give students trapped in underperforming public schools access to more effective educational options. Indeed, many voucher programs target economically disadvantaged students in underperforming urban schools who would otherwise be unable to afford tuition at presumably more effective private schools.

Since the first voucher program began in Milwaukee in the early 1990s, researchers have been studying whether those private schools are actually more effective.

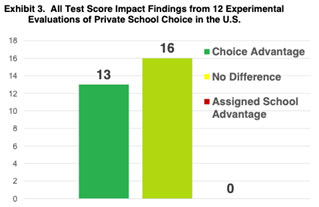

For the most part, school choice proponents have celebrated findings from a set of studies that appear to show consistently positive impacts for students in these voucher programs. For example, in 2015 testimony to the US Congress, one exhibit touted the findings from 12 studies, noting positive results for multiple groups and no results showing voucher students falling behind.

The pro-voucher group EdChoice regularly updates a list of studies on voucher impacts as evidence of these programs' efficacy; its most recent version argues that positive findings on learning far outnumber negative ones.

However, such simplistic representations of the research evidence obscure factors that are important for understanding the effectiveness and potential of voucher programs. “Vote-counting” exercises that compare the numbers of positive, null, and negative findings tell us little about key factors, including:

- Program characteristics (eligibility, caps, voucher amount)

- Study size

- Effect sizes overall, or for different subgroups

- Trends in findings
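To make the contrast concrete, the short Python sketch below compares a vote count with a size-weighted average effect. It is a hypothetical illustration under stated assumptions: the five findings are invented toy numbers, not the studies or programs analyzed in this brief, and the `Finding` record and both helper functions are names introduced only for this example. Weighting by the number of voucher students is a simplification; formal meta-analyses usually weight each estimate by the inverse of its variance.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    effect_sd: float   # estimated math impact, in standard deviations (hypothetical)
    significant: bool  # whether the estimate was reported as statistically significant
    program_size: int  # number of voucher students in the program (hypothetical)


def vote_count(findings: list[Finding]) -> dict[str, int]:
    """Tally positive, null, and negative findings, ignoring size and magnitude."""
    tally = {"positive": 0, "null": 0, "negative": 0}
    for f in findings:
        if not f.significant:
            tally["null"] += 1
        elif f.effect_sd > 0:
            tally["positive"] += 1
        else:
            tally["negative"] += 1
    return tally


def size_weighted_effect(findings: list[Finding]) -> float:
    """Average effect, weighting each finding by its program's number of voucher students."""
    total = sum(f.program_size for f in findings)
    return sum(f.effect_sd * f.program_size for f in findings) / total


# Invented toy data (NOT real study results): several small programs with
# modest gains and one large program with a sizable loss.
toy = [
    Finding(0.10, True, 1_000),
    Finding(0.05, False, 2_000),
    Finding(0.08, True, 1_500),
    Finding(0.12, True, 500),
    Finding(-0.30, True, 30_000),
]

print(vote_count(toy))
print(round(size_weighted_effect(toy), 3))
```

With these toy inputs, the vote count reports three positive findings, one null, and one negative, while the size-weighted average is about -0.25 SD, because the single large program dominates the total number of students affected.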

To provide a more nuanced and precise picture of the evidence on school voucher effects on achievement, we examined the different studies of the impacts of these programs in the US.

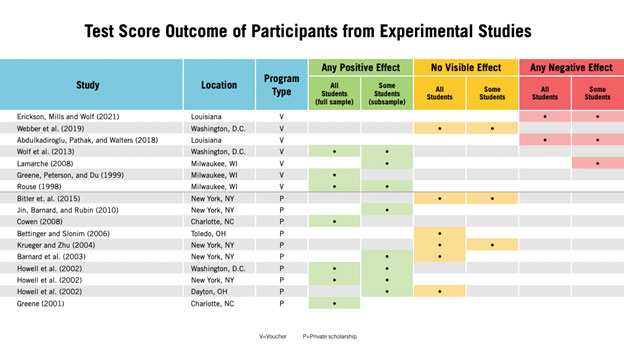

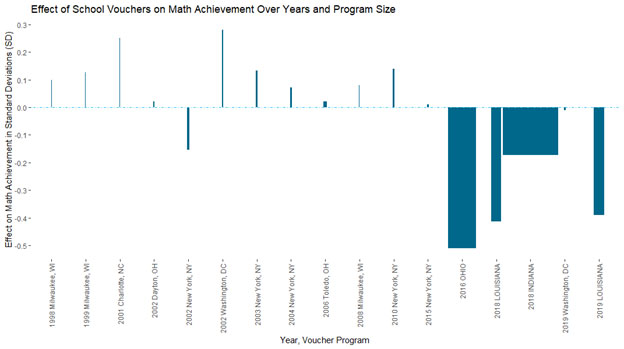

We drew on the list of studies “nominated” by voucher advocates at EdChoice as worthy of attention and added two more recent reports. These two rigorous studies (one published in a leading peer-reviewed journal, the other commissioned by a pro-voucher organization) were designed to allow causal inferences about the impacts of vouchers. The results are plotted in the accompanying timeline.

In this timeline, studies of voucher programs are arranged chronologically from left to right, showing each study's findings on voucher students’ math achievement. (For the sake of brevity, we focus here on math, but we also note that math is thought to better reflect program effects because math, more so than reading, tends to be learned in school.) Impacts are expressed in standard deviations relative to a baseline of 0.0 (no effect), so bars above that line indicate positive impacts on learning and bars below it indicate negative impacts.
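One common way to read an effect expressed in standard deviations is as a percentile shift. Assuming normally distributed test scores (an assumption for illustration, not something every study reports), a student at the 50th percentile of the comparison group who experiences an effect of d standard deviations would land at roughly the Φ(d) percentile, where Φ is the standard normal cumulative distribution function. The minimal Python sketch below uses `statistics.NormalDist` from the standard library; the helper name `percentile_after_shift` and the effect sizes are hypothetical, not results from the timeline.

```python
from statistics import NormalDist


def percentile_after_shift(effect_sd: float) -> float:
    """Percentile a median (50th-percentile) student would reach after an effect of
    effect_sd standard deviations, assuming normally distributed scores."""
    return 100 * NormalDist().cdf(effect_sd)


# Hypothetical effect sizes for illustration only, not study results.
for effect in (0.1, -0.1, -0.4):
    print(f"{effect:+.1f} SD -> {percentile_after_shift(effect):.0f}th percentile")
```

For instance, a hypothetical effect of -0.4 SD would move a median student to about the 34th percentile, while +0.1 SD would move that student to about the 54th.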

In addition, the width of each bar represents program size at the time of the study (i.e., the number of voucher students): wider bars indicate larger programs.

With this analysis, a number of important insights become apparent that were not visible in earlier “vote-counting” representations of voucher effects:

- Impacts in the early studies tended to be modest at best, and those studies examined rather small programs, typically serving targeted populations in specific urban areas.

- As programs grew in size, the results turned negative, often to a remarkable degree, with negative effects of a size virtually unrivaled in education research.

- There was a clear correlation between program size and impacts: smaller programs (and studies) showed modestly positive impacts, while larger programs showed large negative impacts.

Of course, as with the vote-counting representations of voucher research, one should be aware that these programs and studies are not necessarily comparable, so caution is advisable when treating these findings as trends over time. For instance, programs differed in scale, scope, eligibility, funding, demand, take-up, and so on, while the studies differed in design, time spans analyzed, and so forth.

Still, as policymakers seek to expand these policies, this more nuanced view of voucher impacts raises clear concerns about the detrimental effects of these programs on student learning.

Authors

Christopher Lubienski, Ph.D., is Director of the Center for Evaluation and Education Policy at Indiana University.

Yusuf Canbolat is a Ph.D. candidate in Education Policy at Indiana University Bloomington, studying school choice and organization both in the US and internationally.

Edited by: Jason Curlin, Center for Evaluation and Education Policy